But for one reason or another I always come back to my old buddy NUnit. It's probably the familiarity, and the fact that the features that once drew me to MbUnit, like parameterized/data-driven tests, are now available in NUnit.

All that being said, there's some stuff that has always kinda irked me with NUnit, and most of it applies to the others too.

- Test cases are named after their fixture, not after the thing they're verifying.

- Nesting fixtures to provide context and logical grouping doesn't really work.

- Test names tend to duplicate part of the code, since assertion messages tell me the values but not how they were obtained.

1) Test case names

The majority of unit tests written are either targeting a single class, or targeting that class in some context. Fixture names tend to be akin to "FooTests" or "FooWhenBarTests" and are dutifully rendered as such, often including a namespace with "Tests" in it. Quite typically a single test case looks like this: "Acme.Tests.BowlingTests.Score_should_return_0_for_gutter_game"

Now that's just plain silly! Because what happens is that for every single such case we go and mentally remap the class under test to "Acme.Bowling", so why not show that instead? Maybe providing some extra context on the side?

2) Nested Fixtures

If you've ever tried to nest fixtures in NUnit to create a logical grouping for tests, then you're familiar with test names like "Acme.Tests.FooTests+BarTests.oh_my_god". Oh, and these also end up not being nested, but as siblings to all your other tests.
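The "+" in that name is not NUnit's invention; it is how the CLR reports the full name of a nested type. A minimal sketch, reusing the FooTests/BarTests names from above (no NUnit attributes, just plain classes), shows where it comes from:

```csharp
using System;

namespace Acme.Tests
{
    public class FooTests
    {
        // A nested class, as you might write it to group related tests.
        public class BarTests
        {
            public void oh_my_god() { }
        }
    }

    public static class Program
    {
        public static void Main()
        {
            // The CLR joins a nested type to its declaring type with '+'.
            Console.WriteLine(typeof(FooTests.BarTests).FullName);
            // prints "Acme.Tests.FooTests+BarTests"
        }
    }
}
```

Any runner that builds its display names straight from the type's full name will show exactly that string.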

3) Test names duplicate assertions, because assertions only give you the values

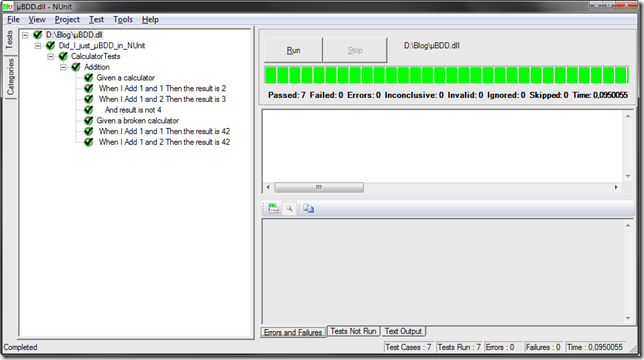

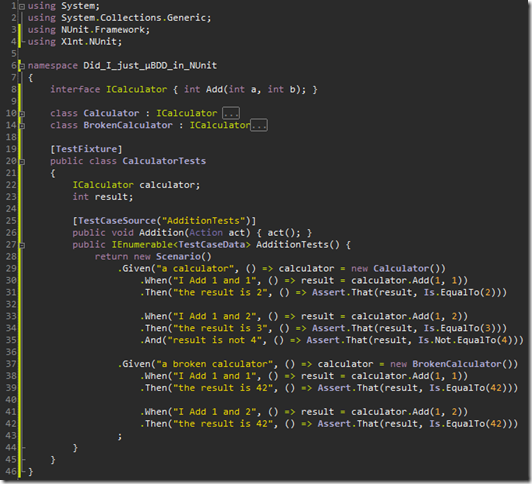

If you lean towards classicist state-based testing, this happens quite a lot. In order to give enough context to make a failing test immediately obvious, you either have to write a custom assert message or add something to your test name. Depending on your asserts and tests either works, but both feel like a hack.

Since I'm on vacation and renovating, I spent a few hours watching the paint dry and hacking together an NUnit addin to solve those issues. The result, henceforth called Cone, solves them for the majority of cases. The picture below should give you a feel for how it looks and feels.

Things worth noting:

- Fixture names are derived from the Described type, not from the fixture name

- Underscores are translated to whitespace to aid scannability

- The actual failing expression "bowling.Score" is shown in the Assertion message
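To make the first two points concrete, here is a rough sketch of the naming idea. It is not Cone's actual implementation: `NamingSketch`, `FixtureName` and `TestName` are hypothetical names made up for illustration, and `Acme.Bowling` is just a stand-in for the class under test.

```csharp
using System;

namespace Acme
{
    // Stand-in for the class under test.
    public class Bowling { }
}

// Hypothetical helper, for illustration only; not part of Cone or NUnit.
public static class NamingSketch
{
    // Name the fixture after the described type rather than the fixture class.
    public static string FixtureName(Type describedType)
    {
        return describedType.FullName;
    }

    // Turn underscores into spaces so the test name reads as a sentence.
    public static string TestName(string methodName)
    {
        return methodName.Replace('_', ' ');
    }
}

// NamingSketch.FixtureName(typeof(Acme.Bowling))
//   returns "Acme.Bowling"
// NamingSketch.TestName("Score_should_return_0_for_gutter_game")
//   returns "Score should return 0 for gutter game"
```

So instead of "Acme.Tests.BowlingTests.Score_should_return_0_for_gutter_game", the pieces you get to work with are "Acme.Bowling" and "Score should return 0 for gutter game".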